Introduction

At Novactive, we are always excited to use new technologies and to enhance our favorite technologies with others when it makes sense for us, for our clients and for the community.

Our business is web technologies, and the most professional content management system (CMS) for us is eZ Platform (previously eZ Publish). That's why we love creating connectors to this CMS. Our most recent eZ project is an image management plugin using Cloudinary.

If you are a web developer, whether or not you already use eZ Platform, Symfony, or Cloudinary, your website probably has some images, so take a look at what this cool connection can offer.

eZ Platform Quick Notes

If you aren't familiar with this CMS, you should have a look, but before we dive into the details about our Cloudinary integration, I just want to share in a few words the main reasons why I think eZ Platform is the best PHP CMS:

- Fully based on the Symfony PHP framework: eZ Platform is a Symfony application, as opposed to just using some Symfony components as others do. If you are a Symfony developer, you'll feel right at home.

- Decoupled CMS: eZ Platform separates the content creation process from the delivery process.

- Headless CMS: Thanks to the REST API, the presentation does not have to be handled by the CMS (but it can be).

- Mature: It's been around for more than 10 years.

- Supported: by eZ Systems

A Bit about Cloudinary

Cloudinary is a cloud-based, end-to-end media management solution that automates and streamlines your entire media asset workflow. It removes all the hassles you would normally need to handle for your site’s images and videos, including responsiveness, compression, manipulations, hosting, caching, delivery and more!

The benefits of Cloudinary go way beyond this plugin and we can't possibly cover them all here. In this post, we are going to focus specifically on the eZ plugin offering, where our main goal was to let eZ Platform developers enjoy Cloudinary's powerful image optimization capabilities and provide easy access to all of Cloudinary's manipulation features, including:

- Advanced resizing

- Smart detection-based cropping

- Face detection

- Instagram-like effects

- Sprite and CSS generation

- Transformation chaining

For example, check out just some of what you can do just by specifying a few resizing and face-detection parameters:

For more examples of the available manipulations, take a look at Cloudinary's image manipulation gallery, or the full list of all available options in the Cloudinary transformation reference.

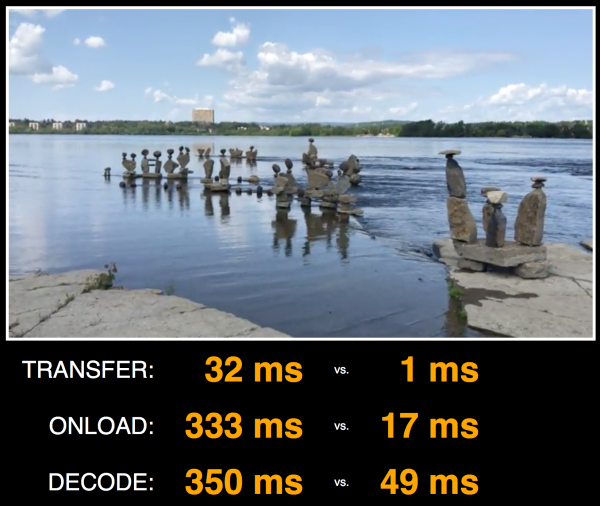

By taking advantage of the Cloudinary plugin, you decouple your application a bit more, you get amazing manipulation features instantly, and automatic optimizations that will give a significant boost to your page load performance!

Motivation

“Content is king” in a web or a mobile project. You always need images or videos with your content.

Then you need to optimize them, store them in different adapted versions (known as variations), host them all, cache them and deliver them.

But you don’t want to reinvent the wheel. Plus, image management is probably not your domain of expertise, so it's probably not the area where you want to invest your time and resources. That's why we decided to create a plugin that can add Cloudinary on top of an existing eZ website smoothly, with almost no development required.

Our requirements for this Minimum Viable Plugin were:

- Using Cloudinary with no change to the architecture

- No changes in the source code

- Original images stay on the eZ Platform local environment (in case you want to stop using Cloudinary in the future)

- The plugin should work on existing projects and provide Cloudinary’s manipulations and delivery features

So the requirements are simple in this MVP, and you still get to host the original image locally.

eZ Platform Default Image Handling

By default, eZ provides a concept of “variation” (previously known as “aliases”). This is a cool feature that many CMSs still lack. It enables developers to make sure images are rendered in an optimized way in the various places they need to appear, for example: on a homepage at a certain size and on a detailed article page at a different size. This already helps prevent editors from loading super-heavy images directly into pages. But it's far from optimal and, as we will see, Cloudinary can do much better.

By default, these image variations are generated with LiipImagineBundle, using the underlying Imagine library. It supports the GD, Imagick or Gmagick PHP extensions, and allows you to define flexible filters to convert your original images into multiple “variations.”

eZ also abstracts the file system. By default, variations are stored on the file system, but you can configure it to store those images in an AWS S3 bucket if you wish. Obviously, they are generated only once and cleared on demand (e.g. content removal).

Here is an example of a variation definition:

    simple:
        reference: ~
        filters:
            - { name: auto_rotate }
            - { name: geometry/scaledownonly, params: [128,128] }
            - { name: strip }

A more complex one:

    complex:
        reference: ~
        filters:
            - { name: auto_rotate }
            - { name: geometry/scaledownonly, params: [326,280] }
            - { name: geometry/crop, params: [326, 280, 0, 0] }
            - { name: background, params: { size: [230, 144], color: '#FFFFFF' } }
            - { name: strip }

This is a great start, but it's not enough, as your servers still need to manage the conversion, storage, delivery and caching. You are also limited to the PHP extension capabilities and the delivery capabilities of your servers/tools. And of course you are lacking all the sophisticated manipulation features that a service like Cloudinary can provide.

Benefits of the MVP

The plugin will give you the ability to create variations based on Cloudinary features. In other words, every manipulation feature available in Cloudinary will be yours as soon as you finish the plugin installation.

There is no code to change, just the variations to define, and if you don’t define them, the plugin will fall back to the standard handling.

Example of a Cloudinary variation:

    case:
        ezreference_variation: ~
        filters:
            width: 710
            height: 428
            crop: 'fit'
            fetch_format: 'auto'
            effect: "brightness:200"
            radius: 'max'

And the "filters" key enables you to use the hundreds of possibilities and combinations provided by Cloudinary.

Plus:

- Images are automatically served through Cloudinary’s servers, and every image is optimized to deliver the best possible quality at the smallest possible file size based on the content of the image and the specific browser that each customer uses to view your content.

- No computation is done on your servers to convert images.

How to Install the Plugin

The package is open source and available on our GitHub here: https://github.com/Novactive/NovaeZCloudinaryBundle.

1) The installation is quite standard, using Composer.

$ composer require novactive/ezcloudinarybundle

2) Register the bundle in your Kernel.

    public function registerBundles()
    {
        ...
        $bundles = array(
            new FrameworkBundle(),
            ...
            new Novactive\Bundle\eZCloudinaryBundle\NovaeZCloudinaryBundle(),
        );
        ...
    }

3) Set up your credentials. If you have not already done so, create a Cloudinary account. You can find your account credentials in the Cloudinary management console.

    nova_ezcloudinary:
        authentification:
            cloud_name: "xxx"
            api_key: "xxxxx"
            api_secret: "xxxx"

4) Set up variation templates:

    system:
        default:
            cloudinary_variations:
                simpletest1:
                    ezreference_variation: 'Native eZ Variation Name, ~ means original'
                    filters: # See Cloudinary documentation for available transformations:
                        width: 200
                        height: 200
                        gravity: 'face'
                        radius: 'max'
                        effect: 'sepia'

5) Usage

NOTHING! That is another part of the beauty of eZ Platform. Your current template code should look like this:

    {{ ez_render_field( content, "image", {
        "parameters": {"alias": 'simpletest1'},
        "attr" : { "class" : "img-responsive" }
    }
    ) }}

At this point, the plugin automatically takes over and the function nova_ezcloudinary_alias will be used instead of ez_image_alias. The bundle falls back on the native variation system if the alias name does not exist in cloudinary_variations.

So basically there is no change in your code, just a YAML configuration for your variations.

How Does it Work?

The MVP uses the Cloudinary fetch feature. At rendering time, nova_ezcloudinary_alias changes the source of the image and generates a Cloudinary fetch URL for it.

Note: Cloudinary also offers a custom CNAME option, so that the URLs use your own domain (available on higher-tier plans).

The first time the image is requested, Cloudinary automatically fetches the original image from your eZ storage, stores it in a relative path in your Cloudinary account, and then performs the requested manipulations so that all the variations are then hosted by Cloudinary and served via CDN.
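To make the shape of such a URL concrete, here is a minimal PHP sketch of how a fetch URL could be assembled from a variation's filters. It assumes Cloudinary's standard delivery host (res.cloudinary.com) and its URL short codes for transformation parameters (w, h, c, g, r, e, f). The function name buildFetchUrl, the "demo" cloud name and the example.com image URL are hypothetical, and this is not the bundle's actual implementation.

```php
<?php
declare(strict_types=1);

/**
 * Build a Cloudinary "fetch" URL for a remote image.
 *
 * Illustrative sketch only: the function name and the short-code map are
 * assumptions made for this example, not code taken from the bundle.
 */
function buildFetchUrl(string $cloudName, array $filters, string $originalUrl): string
{
    // Small subset of Cloudinary's URL short codes for transformation parameters.
    $shortCodes = [
        'width'        => 'w',
        'height'       => 'h',
        'crop'         => 'c',
        'gravity'      => 'g',
        'radius'       => 'r',
        'effect'       => 'e',
        'fetch_format' => 'f',
    ];

    $parts = [];
    foreach ($filters as $name => $value) {
        if (!isset($shortCodes[$name])) {
            continue; // ignore parameters this sketch does not know about
        }
        $parts[] = $shortCodes[$name] . '_' . $value;
    }

    $segments = ['https://res.cloudinary.com', $cloudName, 'image', 'fetch'];
    if ($parts !== []) {
        $segments[] = implode(',', $parts); // one comma-separated transformation
    }
    $segments[] = $originalUrl; // the original image stays on your own server

    return implode('/', $segments);
}

echo buildFetchUrl(
    'demo',
    ['width' => 200, 'height' => 200, 'crop' => 'thumb', 'gravity' => 'face', 'radius' => 'max'],
    'https://www.example.com/images/photo.jpg'
), PHP_EOL;
// prints https://res.cloudinary.com/demo/image/fetch/w_200,h_200,c_thumb,g_face,r_max/https://www.example.com/images/photo.jpg
```

The transformation segment sits between the fetch keyword and the original URL, which is why no upload step is needed. If the original URL contains special characters such as ? or =, it would likely need to be URL-encoded before being appended.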

Dynamic Responsive Images

Once you have Cloudinary at your service, you can use its capabilities to more easily deliver responsive images. There are three ways to do this:

- Dynamic image manipulation: Use Cloudinary to generate transformed versions of images. Then use the HTML srcset attribute, enabling the browser to choose which image version to display based on the device it is running on.

- Automating responsive images with JavaScript (client side): Programmatically set the <img> src URL.

- Automating responsive images with Client Hints (server side): Deliver the optimal image based on the available width reported in the Client Hints request header. Note that Client Hints are not supported by all browsers.

We usually go with the src and srcset attributes of the <img> HTML tag, as this approach does not require JavaScript.

Simply put, srcset gives the browser a set of URLs (variations) to choose from depending on the viewport size. The browser takes that information, combines it with the window width and screen density it already knows, and does its job!
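As a rough illustration of that selection step, the PHP sketch below picks the smallest candidate that is wide enough for the viewport width multiplied by the screen density, and falls back to the largest one otherwise. This is a simplified model: real browsers are free to apply their own heuristics, and the sketch assumes the image spans the full viewport width. The function name pickSrcsetCandidate and the candidate names are hypothetical.

```php
<?php
declare(strict_types=1);

/**
 * Simplified model of how a browser might choose a srcset candidate.
 *
 * $candidates maps each URL to its "w" descriptor (intrinsic width in pixels).
 * Illustrative only; actual browser behavior may differ.
 */
function pickSrcsetCandidate(array $candidates, int $viewportWidth, float $devicePixelRatio): string
{
    if ($candidates === []) {
        throw new InvalidArgumentException('At least one candidate is required.');
    }

    // Number of physical pixels needed to fill the viewport width.
    $neededWidth = $viewportWidth * $devicePixelRatio;

    asort($candidates); // sort by width, ascending, keeping the URLs as keys

    $chosen = '';
    foreach ($candidates as $url => $width) {
        $chosen = $url;
        if ($width >= $neededWidth) {
            break; // smallest candidate that is wide enough
        }
    }

    return $chosen; // the largest candidate if none was wide enough
}

$candidates = [
    'myvariationname_1x' => 640,
    'myvariationname_2x' => 1040,
    'myvariationname_3x' => 1560,
];

// A 375px-wide viewport on a 2x screen needs 750 physical pixels.
echo pickSrcsetCandidate($candidates, 375, 2.0), PHP_EOL; // prints myvariationname_2x
```

The point of the model is that the markup only lists the options; the decision is made on the client, where the viewport width and screen density are actually known.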

To exploit this feature with eZ, you just have to override the ezimage template to make it even more dynamic, using the following two steps:

- Create variations for each size:

  - myvariationname

  - myvariationname_1x

  - myvariationname_2x

  - myvariationname_3x

- Change the template that renders images, and adapt.

Here's the code:

    {% block ezimage_field %}
    {% spaceless %}
        {% if not ez_is_field_empty( content, field ) %}
            {% set aliasName = parameters.alias|default( 'original' ) %}
            {% set imageAlias = nova_ezcloudinary_alias( field, versionInfo, aliasName ) %}
            {% set src = imageAlias ? asset( imageAlias.uri ) : "//:0" %}
            {% set width = parameters.width is defined ? parameters.width : imageAlias.width %}
            {% set height = parameters.height is defined ? parameters.height : imageAlias.height %}
            {% if aliasName == 'original' %}
                {% set densities = [] %}
                {% set densitiesSizes = [] %}
            {% else %}
                {% set densities = ['1x', '2x', '3x'] %}
                {% set densitiesSizes = ['640w', '1040w', '1560w'] %}
            {% endif %}
            <img {{ block( 'field_attributes' ) }}
                src="{{ src }}"{% if width %}
                width="{{ width }}"{% endif %}{% if height %}
                height="{{ height }}"{% endif %}
                alt="{{ field.value.alternativeText }}"{% if parameters.class is defined and parameters.class is not empty %}
                class="{{ parameters.class }}"{% endif %}
                {% if densities|length > 0 %}
                srcset="{% for density in densities %}{{ nova_ezcloudinary_alias( field, versionInfo, parameters.alias~'_'~density ).uri }} {{ densitiesSizes[loop.index0] }}{% if not loop.last %},{% endif %}{% endfor %}"
                {% endif %}
            />
        {% endif %}
    {% endspaceless %}
    {% endblock %}

With the above, you will end up with generated HTML that looks something like this:

    <img class="ezimage-field"
         src="https://cloudinaryURL_for_myvariationname"
         srcset="https://cloudinaryURL_for_myvariationname_1x 640w,
                 https://cloudinaryURL_for_myvariationname_2x 1040w,
                 https://cloudinaryURL_for_myvariationname_3x 1560w" />

That's it! If you want to know more about srcset and sizes, check out this great ycombinator responsive images article.

What's Next?

What's Next? Well, that's for you to determine. We invite you to create a free Cloudinary account, install the plugin, and start to play with the images on your eZ site. We have a lot of big ideas for the next versions of this initial eZ-Cloudinary MVP, but first and foremost, we want to hear your ideas and see where you take it.

You are all welcome to contribute!

About Novactive

Novactive (Nextedia Group) is a web agency that develops digital platforms. Founded in 1996, Novactive is the brainchild of several web aficionados, whose combined vision of integrity and purpose drives every business decision. In 2016, Novactive joined the Nextedia Group. Today Novactive with Nextedia is comprised of a highly experienced multidisciplinary team with more than 200 digital experts based in Paris, Toulon and San Francisco.

Sébastien Morel is the CTO of Novactive, head of U.S. operations and technology, and runs the California office. Sébastien has been with the group for over 14 years.

“I love to take different web technologies and mix them together to get the best of them for our clients, but also to improve the developer and user experience. Open sourcing MVPs and packages is part of our core values. Sharing best practices and implementations, and allowing others to participate is THE way to build quality.”

Novactive is part of Cloudinary's partnership network. We at Cloudinary value our partners. If you are interested in becoming a Cloudinary partner, check out our partnership program.
