I'll start by giving it to you straight:

As part of the recent "auto–everything" launch, we introduced two new transformation parameters – dpr_auto and w_auto, which pair the DPR and Width Client Hints with Cloudinary’s existing image resizing and delivery infrastructure, in order to serve up simple, automatic responsive images.

If you’ve spent any time wrestling images into responsive layouts you know that those words — “simple”, “automatic”, and “responsive images” — are rarely found in honest proximity. So, take a quick peek at the demo and allow me to give you a glimpse into the future of images on the web.

Mo' resources, mo' problems

Along with media queries and fluid grids, “flexible images” are one of the tentpoles of Ethan Marcotte’s Responsive Web Design. And, as Ethan pointed out in the seminal first edition of his book, achieving visually flexible images is as easy as:

img { max-width: 100%; }

For that rule to work, though, the image resources backing it up must be big enough to fill large viewports and high-resolution displays. Shoving single-src images into responsive layouts means sending gigantic resources to everyone. Which is a performance disaster.

And so, along came a suite of new HTML features which allow images to adapt, ensuring that each user gets a resource that is tailored to their particular context. These features implement a key idea: authors should be able to mark up multiple, alternate resources. These features let us transform this bit of ham-fisted responsiveness:

<img src="enormous.jpg" alt="Worst-case performance scenarios for all" />

…into an adaptable, multi-headed Hydra:

<img sizes="100vw" srcset="tiny.jpg 320w, small.jpg 512w, medium.jpg 640w, large.jpg 1024w, huge.jpg 1280w, enormous.jpg 2048w" src="fallback.jpg" alt="To each according to his ability" />

But this adaptability comes at a price, paid in complexity. Generating, managing, and marking-up two or three (or six) “multiple, alternate” resources for every image can be both tedious and fiendishly complex. Services like Cloudinary can automate the resource generation side of the equation. But what to do about all of that markup?
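To make that markup burden concrete, here is a minimal Python sketch of the kind of template helper you end up writing to keep srcset attributes in sync with your generated files. The function names and the `bike-320.jpg` style naming scheme are assumptions made for illustration; they are not part of any Cloudinary SDK.

```python
from html import escape


def build_srcset(basename, widths, ext="jpg"):
    """Return a srcset value with one width-described candidate per width."""
    # Hypothetical naming scheme: bike-320.jpg, bike-640.jpg, ...
    unique_widths = sorted(set(widths))
    return ", ".join(f"{basename}-{w}.{ext} {w}w" for w in unique_widths)


def build_img_tag(basename, widths, sizes="100vw", alt=""):
    """Assemble a full <img> tag; the smallest file doubles as the src fallback."""
    srcset = build_srcset(basename, widths)
    fallback = f"{basename}-{min(widths)}.jpg"
    return (
        f'<img sizes="{escape(sizes)}" srcset="{escape(srcset)}" '
        f'src="{escape(fallback)}" alt="{escape(alt)}" />'
    )
```

Even this toy version has to agree with your image pipeline about widths and file names, and it still needs a hand-written sizes value for every layout slot.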

Considering JavaScript

One answer to that question — one that we have embraced and implemented — is: use JavaScript.

Our last example offered the browser an array of options, allowing it to pick the best resource for the current context. A smart bit of JavaScript can directly measure the browsing context, and — when paired with on-the-fly, server-side resizing — request a single, perfectly-sized resource every time, with little or no extra markup required.

But... There are some problems with this approach. First, it requires setting up some JavaScript infrastructure, which is a complexity of a different flavor. Second, we’re inserting JavaScript between users and our pages’ core content, which can be tricky to do well. Worst of all, though — as it turns out — the smart people that make browsers for a living have noticed that images account for 64% of the web’s bytes, and they have gone to Herculean lengths to get those bytes across the wire as quickly as possible. Their main tool in that quest: the speculative pre-parser. In a nutshell, it tries to kick off as many image loads as possible[1] before a page’s HTML has even finished parsing. This results in an enormous 20% speed boost, and an intractable problem, when it comes to responsive images.

You see, for JavaScript to load made-to-measure image resources, it must first measure the page — which means waiting until page layout is complete. This happens long after the pre-parser has raced through the HTML, kicking off image loads with wild abandon. This means that if we wish to use JavaScript to load responsive images, we face a choice. We must either:

- let the pre-parser do its thing, and, after a lengthy delay, set our JavaScript loose to double-load all of our images, or,

- sabotage the pre-parser by authoring invalid, src-less <img>s so that our JavaScript can, after that same delay, start loading our pages’ images last, instead of first.

Both options present significant compromises; both set us back from achieving our over-arching goal, which is not, after all, simply to load appropriately-sized images. Our larger goal is performance.

Enter Client Hints

So, where does that leave us? Performant, flexible images are inherently complex; marking up multiple, alternate resources can get verbose and complicated; trying to solve the problem with JavaScript is fraught with compromise. What other tools do we have at our disposal? Where else can we shift the burden of this complexity?

The idea of Client Hints is: let’s put it on the server! If you’re a front-end developer, Client Hints means making responsive images somebody else’s problem. Cloudinary’s pitch to you, front-end-developer, is: make responsive images our problem! Leverage our Automatic Width and Automatic DPR features and we’ll handle this stuff so that you don’t have to.

Automatic DPR

Here’s a simple, live example:

cl_image_tag("bike.jpg", :width=>512, :dpr=>"auto", :use_root_path=>true, :client_hints=>true)

cl_image_tag("bike.jpg", array("width"=>512, "dpr"=>"auto", "use_root_path"=>true, "client_hints"=>true))

CloudinaryImage("bike.jpg").image(width=512, dpr="auto", use_root_path=True, client_hints=True)

cloudinary.image("bike.jpg", {width: 512, dpr: "auto", use_root_path: true, client_hints: true})

cloudinary.url().transformation(new Transformation().width(512).dpr("auto")).clientHints(true).useRootPath(true).imageTag("bike.jpg")

$.cloudinary.image("bike.jpg", {width: 512, dpr: "auto", use_root_path: true, client_hints: true})

cloudinary.Api.UrlImgUp.Transform(new Transformation().Width(512).Dpr("auto")).ClientHints(true).UseRootPath(true).BuildImageTag("bike.jpg")

The above URL delivers different resources to different users, depending on their context. In browsers that support Client Hints (at the moment, that’s mostly just Chrome), 1x devices will receive 1x resources; 2x screens will receive 2x resources. The above URL responds to varying DPRs automatically.

How? There’s a little tag in the <head> of this page which looks like this:

<meta http-equiv="Accept-CH" content="DPR">

That tells the browser to tack an extra HTTP header named DPR onto all of its subsequent requests, advertising the current devicePixelRatio. Those DPR HTTP headers are Client Hints. And smart servers can use them to tailor custom, smart responses. And so, when we opt-in to sending DPR Hints with a little meta magic, and add dpr_auto to our URL, we’re able to deliver different resources to different users depending on the density of their display.
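If it helps to picture the server side, the logic can be sketched roughly like this. This is an assumption about the general shape of such a handler, not Cloudinary's implementation: `scaled_width` is a hypothetical helper that reads the DPR request header from a plain dictionary and multiplies the requested CSS-pixel width by it, falling back to 1x when the hint is missing or unusable.

```python
import math


def scaled_width(headers, css_width, default_dpr=1.0):
    """Return the pixel width to render for a request, based on its DPR hint."""
    # HTTP header names are case-insensitive, so normalize before looking up.
    normalized = {name.lower(): value for name, value in headers.items()}
    try:
        dpr = float(normalized.get("dpr", default_dpr))
    except (TypeError, ValueError):
        dpr = default_dpr
    # Ignore nonsensical values (zero, negative, NaN, infinity).
    if not math.isfinite(dpr) or dpr <= 0:
        dpr = default_dpr
    return round(css_width * dpr)
```

With the 512-pixel example above, a request carrying `DPR: 2.0` would be rendered at 1024 pixels, while a browser that sends no hint would get the plain 512-pixel version.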

Why else might we want to vary the scale of a resource in response to a fact about the browsing context? I’ll tell you why: to fit flexible, responsive layouts!

Automatic Width

Just like dpr_auto, w_auto sends different users different resources from the same URL. But whereas dpr_auto scales images to fit different display densities, w_auto scales images to fit variable layout widths. In its simplest form, w_auto looks like this:

<meta http-equiv="Accept-CH" content="DPR, Width">
[…snip…]
<img sizes="100vw" src="http://res.cloudinary.com/demo/w_auto/bike.jpg" alt="Smiling girl with a bike." />

What have we done here?

First, we’ve used our meta tag to opt the browser into sending another Client Hint along with its requests: Width.

Next, note that our img includes a sizes attribute. This attribute tells the browser the layout width of the image[2]. The browser then broadcasts that width to the server, via the Width hint. No sizes, no Width. No Width? w_auto does nothing. So (besides the opt-in meta tag), this is the one additional thing that w_auto asks of you: for it to work, you’ll have to stick sizes on your imgs.

Finally, note that I didn’t include dpr_auto in the URL. I didn’t have to! If we opt into sending both DPR and Width Hints in the meta tag up top, w_auto images will be scaled based on both of them.
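As a rough mental model of what reaches the server, the sketch below mimics the browser's side of the exchange. It assumes, following my reading of the Client Hints draft, that the Width hint is expressed in physical pixels: the layout width from sizes multiplied by devicePixelRatio and rounded up. `width_hint` and `client_hint_headers` are hypothetical names, and only the simple `Nvw` form of sizes is modeled.

```python
import math


def width_hint(layout_width_css_px, device_pixel_ratio):
    """Physical-pixel width the browser would advertise for one image."""
    return math.ceil(layout_width_css_px * device_pixel_ratio)


def client_hint_headers(viewport_width_css_px, sizes_vw, device_pixel_ratio):
    """Build the DPR and Width request headers for an image sized in vw units."""
    # sizes="100vw" means the image spans 100% of the viewport width.
    layout_width = viewport_width_css_px * sizes_vw / 100
    return {
        "DPR": f"{device_pixel_ratio:g}",
        "Width": str(width_hint(layout_width, device_pixel_ratio)),
    }
```

Under that assumption, a 2x phone with a 400-pixel-wide viewport and `sizes="100vw"` would ask for a Width of 800.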

So — that’s w_auto at its simplest; in Chrome, it just works. But, especially if you’re using the feature in production, you should dive a little deeper.

Advanced w_auto usage

w_auto can take two optional parameters:

cl_image_tag("bike.jpg", :width=>"auto:100:400", :use_root_path=>true, :client_hints=>true)

cl_image_tag("bike.jpg", array("width"=>"auto:100:400", "use_root_path"=>true, "client_hints"=>true))

CloudinaryImage("bike.jpg").image(width="auto:100:400", use_root_path=True, client_hints=True)

cloudinary.image("bike.jpg", {width: "auto:100:400", use_root_path: true, client_hints: true})

cloudinary.url().transformation(new Transformation().width("auto:100:400")).clientHints(true).useRootPath(true).imageTag("bike.jpg")

$.cloudinary.image("bike.jpg", {width: "auto:100:400", use_root_path: true, client_hints: true})

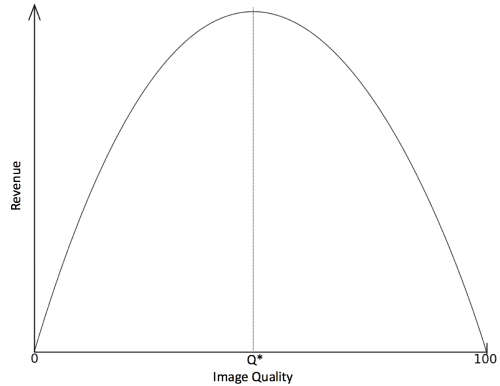

cloudinary.Api.UrlImgUp.Transform(new Transformation().Width("auto:100:400")).ClientHints(true).UseRootPath(true).BuildImageTag("bike.jpg")The first (:100) tells Cloudinary how big the “jumps” between alternate resources should be. If you set this to :1, resources will be scaled to match the layout width exactly — which as it turns out, is a bad idea. To deliver perfectly-scaled resources, our servers must render and store hundreds, maybe thousands of alternate versions of your image, which is both computationally expensive and terrible for your pages’ performance. More, alternate resources means fewer cache hits, and a hit at any level — in the local browser cache, on the CDN, or server-side — will always be significantly faster than a miss. Thus, this first parameter allows you to limit the number of possible responses by defining a “rounding step” between them. For example, here, if the target width is 452 pixels, Cloudinary will round up to the nearest hundred and return a 500-pixel-wide resource.
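The rounding itself is simple arithmetic. Here is a small Python sketch of it, along with a count of how many distinct renditions a given step can produce. Both function names are made up for this illustration, and the 2000-pixel ceiling used in the usage note is an arbitrary assumption.

```python
def round_up_to_step(width, step):
    """Round a requested width up to the next multiple of step."""
    if step < 1:
        raise ValueError("step must be a positive integer")
    # Integer ceiling division avoids floating-point surprises.
    return -(-width // step) * step


def distinct_variants(max_width, step):
    """Count the different widths served for requests from 1 to max_width."""
    return len({round_up_to_step(w, step) for w in range(1, max_width + 1)})
```

For requested widths up to 2000 pixels, a step of 1 allows 2000 different renditions of a single image, while a step of 100 caps that at 20, which is where the extra cache hits come from.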

The second parameter (:400) serves as a fallback Width, which will be used if the browser doesn’t send a Width Hint. In this example, browsers that don’t support Client Hints are served a 400-pixel-wide image. (400 is a “mobile-first” default. If you would rather serve a “desktop-first” default to non-supporting browsers, set this to something higher, like 1000.) Word to the wise: without a Width or this fallback, w_auto images will be delivered at their original size. If you’re working with large originals — especially given Client Hints’ nascent support — this could be disastrous! You do not want to start sending 12 megapixel originals to Mobile Safari.
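Putting the two parameters together, the decision described above can be sketched as follows. This is an illustration of the behavior as the text describes it, not Cloudinary's code: `parse_w_auto` and `resolve_width` are hypothetical helpers, only numeric steps are handled, and a return value of `None` stands for "deliver the original size".

```python
from typing import Optional, Tuple


def parse_w_auto(value: str) -> Tuple[Optional[int], Optional[int]]:
    """Split 'auto', 'auto:100' or 'auto:100:400' into (step, fallback)."""
    parts = value.split(":")
    if parts[0] != "auto" or len(parts) > 3:
        raise ValueError(f"not a w_auto value: {value!r}")
    step = int(parts[1]) if len(parts) > 1 else None
    fallback = int(parts[2]) if len(parts) > 2 else None
    return step, fallback


def resolve_width(value: str, width_hint: Optional[int]) -> Optional[int]:
    """Pick the width to deliver, or None to deliver the original size."""
    step, fallback = parse_w_auto(value)
    if width_hint is None:
        # No Width hint: use the fallback if one was given.
        return fallback
    if step:
        # Round the hinted width up to the next multiple of the step.
        return -(-width_hint // step) * step
    return width_hint
```

The last case is the one to watch: `resolve_width("auto", None)` returns `None`, which is the "12 megapixel original" scenario.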

There is one more thing to know about these parameters; I’ve saved w_auto’s best trick for last.

Automatic breakpoints

Picking an arbitrary step-size using the first parameter can feel, well, confusingly arbitrary. Four years ago, Jason Grigsby pointed out that it makes more sense to think about these steps in terms of bytes, rather than pixels. We agreed, and built a tool that implements Jason’s idea — the Responsive Image Breakpoints Generator. The tool was built on API calls that are available to everyone with a Cloudinary account; we’ve now built that same logic into w_auto.

Our servers can look at your images, see how they compress, and determine byte-size-based gaps between images for you if you set the steps parameter to a special value: :breakpoints.
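The byte-based idea can be sketched in a few lines of Python. This shows the general approach only, not the algorithm our servers run: `estimate_bytes` is a hypothetical stand-in for encoding the image at a given width and measuring the result, and both the byte budget and the 10-pixel search increment are arbitrary choices for the example.

```python
from typing import Callable, List


def byte_based_breakpoints(
    min_width: int,
    max_width: int,
    byte_step: int,
    estimate_bytes: Callable[[int], int],
    px_step: int = 10,
) -> List[int]:
    """Pick widths so neighbouring breakpoints differ by about byte_step bytes."""
    breakpoints = [max_width]
    last_bytes = estimate_bytes(max_width)
    width = max_width - px_step
    # Walk down from the largest width, keeping a width each time the
    # file has shrunk by at least byte_step since the last kept width.
    while width > min_width:
        size = estimate_bytes(width)
        if last_bytes - size >= byte_step:
            breakpoints.append(width)
            last_bytes = size
        width -= px_step
    breakpoints.append(min_width)  # always offer the smallest width
    return sorted(set(breakpoints))
```

An image that compresses well ends up with a handful of widely spaced breakpoints, while a detailed, heavy image gets more of them, which is the point of measuring in bytes rather than pixels.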

w_auto:breakpoints packages up a lot of smart thinking and builds on years’ worth of foundational effort to provide an astoundingly automatic responsive images solution that’s smart not only about images’ context — but also their particular content. Alas, :breakpoints requires a bit of gymnastics at the CDN layer and at the moment, you’ll need to contact us to set it up for your account.

Speaking of the imperfect present…

Let’s talk about Client Hints’ browser support

Right now, Client Hints are only supported in Chrome and Opera. That means that dpr_auto and w_auto will work for approximately half of your users[3].

Other browsers have been slow to follow Google’s lead. Like every new web platform feature, Client Hints faces a chicken-and-egg problem. Vendors don’t want to implement features in browsers until they see a clearly-expressed demand; authors don’t want to use features on their pages until they enjoy almost universal support.

dpr_auto and w_auto are, among other things, our attempt to nudge Client Hints forward. We developed these features and are advocating for their adoption because we believe fully-automated, drop-dead simple, responsive, and performant images should not be the exception, but rather the norm.

So, should you use them?

If you’re starting a new project or retrofitting an old one, doing something about responsive images should be at the top of your to-do list. If you’re willing to put in the effort, a markup-based solution will get you the most browser support, but automating that markup can be complicated. JavaScript-based solutions are simpler, but operate within significant performance constraints.

Client Hints pave a third path, which is both simple and performant. And, despite browsers’ uneven Client Hints support, w_auto and dpr_auto provide a lot of value, right now.

The vast majority of websites aren’t doing anything about responsive images; most developers are still sending a single, wasteful, high-resolution resource to everyone. Implementing dpr_auto and w_auto requires next-to-no effort and delivers fully-responsive images to around half of your visitors without negatively impacting the other half. They provide tremendous performance gains now, and those gains will grow as browser support improves.

Client Hints are where the puck is going for images on the web. Sign up for a free account and start using them, today.

Further reading

2,000 words in and you still want more? I encourage you to dive into the following resources:

- Cloudinary's official automatic responsive images documentation, featuring:

  - dpr_auto

  - w_auto

  - A special shout-out to w_auto:breakpoints

- Leaner Responsive Images with Client Hints, by Jon Arne Sæterås

- Implementing responsive images with Cloudinary & Javascript

- Implementing responsive images with Cloudinary & HTML

[1] Speculative pre-parsers initiate 43% of all image loads

[2] Sizes takes CSS lengths and optionally pairs them with media queries. A complete account of its abilities is out of scope for this article, but suffice to say, sizes (especially in combination with viewport relative units, the CSS calc function, and media queries), can express just about any dynamic layout width that you can dream up. Based on the information in sizes, browsers (and their speculative pre-parsers) can figure out how many px the image will occupy on the layout without knowing about, or needing to load, any other piece of the page.

[3] Depending on your audience, it could actually be a lot higher! For instance: 70% of our readers here on the Cloudinary blog use Chrome.
